IoT sensing is a critical component of the Internet of Things (IoT) ecosystem. It is the process of capturing data from physical sensors, such as temperature, humidity, or light sensors, and transmitting that data to a central system for processing and analysis. IoT sensing is used in a variety of applications, from smart homes and buildings to industrial automation and healthcare. In digital assurance, IoT testing plays a vital role, and so does IoT sensing.

From IoT sensing to cloud

The IoT sensing architecture consists of three main components: sensors, gateways, and cloud platforms.

Sensors are physical devices that capture data from the environment. They can be wired or wireless and come in various forms, such as temperature sensors, humidity sensors, pressure sensors, and motion sensors. Sensors can also include cameras, microphones, and other devices that capture data in different formats.

Gateways are the intermediate devices that receive data from sensors and transmit that data to a cloud platform for processing and analysis. Gateways can perform data filtering and aggregation, as well as provide security and connectivity to different types of networks.
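To make "filtering and aggregation" concrete, here is a minimal sketch of what a gateway might do with a batch of readings before forwarding it to the cloud. The `Reading` type, the `filter_and_aggregate` function, and the -40 to 85 plausibility range are hypothetical choices for illustration, not part of any particular gateway product.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Iterable


@dataclass
class Reading:
    sensor_id: str  # hypothetical identifier assigned to each sensor
    value: float    # e.g. temperature in degrees Celsius


def filter_and_aggregate(readings: Iterable[Reading], low: float, high: float) -> dict[str, float]:
    """Drop out-of-range readings, then average the remaining values per sensor."""
    grouped: dict[str, list[float]] = {}
    for r in readings:
        # Filtering: discard values outside the plausible range (e.g. a faulty probe).
        if low <= r.value <= high:
            grouped.setdefault(r.sensor_id, []).append(r.value)
    # Aggregation: forward one summary value per sensor instead of every raw sample.
    return {sensor_id: mean(values) for sensor_id, values in grouped.items()}


batch = [Reading("t1", 21.0), Reading("t1", 23.0), Reading("t1", 999.0), Reading("t2", 18.5)]
print(filter_and_aggregate(batch, low=-40.0, high=85.0))  # {'t1': 22.0, 't2': 18.5}
```

Sending one averaged value per sensor rather than every sample is one way a gateway can cut the bandwidth used on the uplink to the cloud.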

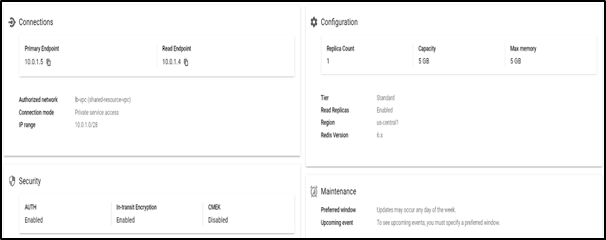

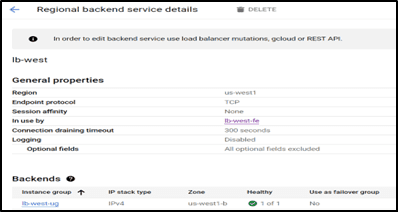

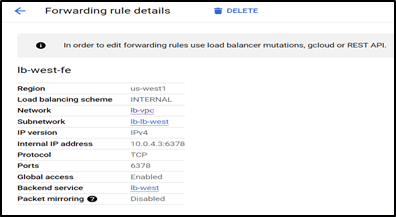

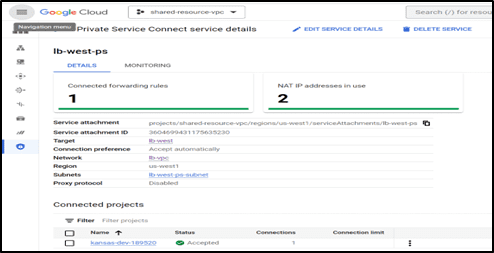

Cloud platforms are the central systems that receive and process data from sensors and gateways. Cloud platforms can store data, run analytics, and provide dashboards and visualizations for end-users. Cloud platforms can also integrate with other systems, such as enterprise resource planning (ERP) or customer relationship management (CRM) systems.

Here are a few examples of IoT sensing protocols:

- MQTT – One commonly used protocol for IoT sensing is the MQTT (Message Queuing Telemetry Transport) protocol. MQTT is a lightweight, publish-subscribe messaging protocol designed for IoT applications with low bandwidth, high latency, or unreliable networks.

- CoAP – Another protocol that can be used for IoT sensing is CoAP (Constrained Application Protocol), which is designed for constrained devices and networks. CoAP uses a client-server model, where the client sends requests to the server and the server responds with data.
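To show why MQTT is described as lightweight, the sketch below hand-builds an MQTT 3.1.1 PUBLISH packet at QoS 0. It assumes a connection to a broker has already been established (CONNECT is not shown), and the topic `sensors/temp` and payload are hypothetical. In practice a client library such as Eclipse Paho would handle this framing for you.

```python
def encode_remaining_length(n: int) -> bytes:
    """MQTT variable-length integer: 7 data bits per byte, high bit means 'more bytes follow'."""
    if not 0 <= n <= 268_435_455:
        raise ValueError("remaining length out of range")
    out = bytearray()
    while True:
        byte = n % 128
        n //= 128
        if n > 0:
            byte |= 0x80  # continuation bit
        out.append(byte)
        if n == 0:
            return bytes(out)


def encode_publish_qos0(topic: str, payload: bytes) -> bytes:
    """Build an MQTT 3.1.1 PUBLISH packet with QoS 0 (so no packet identifier)."""
    topic_bytes = topic.encode("utf-8")
    # Variable header: 2-byte big-endian topic length followed by the topic itself.
    body = len(topic_bytes).to_bytes(2, "big") + topic_bytes + payload
    # Fixed header: packet type 3 (PUBLISH) in the high nibble; DUP=0, QoS=0, RETAIN=0.
    return bytes([0x30]) + encode_remaining_length(len(body)) + body


print(encode_publish_qos0("sensors/temp", b"21.5").hex())
# 3012000c73656e736f72732f74656d7032312e35
```

A temperature reading on a short topic fits in 20 bytes including all framing, which is part of what makes MQTT attractive on low-bandwidth links.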

An example of using CoAP for IoT sensing could be in a smart agriculture system. Let’s say we have a soil moisture sensor in a field that sends data to an IoT platform using CoAP. The platform would send a request to the CoAP server running on the sensor, asking for the current soil moisture level. The sensor would respond with the data, which the platform could use to determine whether the crops need watering.
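As a rough illustration of the request side of this exchange, the sketch below encodes a confirmable CoAP GET request (RFC 7252 message format) for a resource such as the current soil moisture level. The resource path `moisture` and the message ID are hypothetical; UDP transport, retransmission, and response parsing are left out. A library such as aiocoap would normally take care of all of this.

```python
def _option_nibble(value: int) -> tuple[int, bytes]:
    """Encode an option delta or length as a 4-bit nibble plus extended bytes (RFC 7252)."""
    if value < 13:
        return value, b""
    if value < 269:
        return 13, bytes([value - 13])
    if value < 65805:
        return 14, (value - 269).to_bytes(2, "big")
    raise ValueError("option delta/length too large")


def build_coap_get(path: str, message_id: int, token: bytes = b"") -> bytes:
    """Build a confirmable (CON) CoAP GET request with one Uri-Path option per segment."""
    if len(token) > 8:
        raise ValueError("token must be at most 8 bytes")
    version, msg_type, code = 1, 0, 0x01  # version 1, type CON, code 0.01 = GET
    header = bytes([(version << 6) | (msg_type << 4) | len(token), code])
    header += message_id.to_bytes(2, "big")
    URI_PATH = 11  # option number for Uri-Path
    options = bytearray()
    last_number = 0
    for segment in (s for s in path.split("/") if s):
        value = segment.encode("utf-8")
        # Options carry the delta from the previous option number, not the number itself.
        delta_nib, delta_ext = _option_nibble(URI_PATH - last_number)
        len_nib, len_ext = _option_nibble(len(value))
        options.append((delta_nib << 4) | len_nib)
        options += delta_ext + len_ext + value
        last_number = URI_PATH
    # A GET carries no payload, so no 0xFF payload marker is appended.
    return header + token + bytes(options)


print(build_coap_get("moisture", message_id=0x1234).hex())
# 40011234b86d6f697374757265
```

The whole request is 13 bytes, which reflects CoAP's focus on constrained devices and networks.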

There are many other protocols that can be used for IoT sensing, including HTTP, WebSocket, and AMQP. The choice of protocol depends on the specific requirements of the application, such as the level of security needed, the amount of data being transmitted, and the network environment.


IoT Sensing Use Cases

IoT sensing can be used in a variety of applications, such as smart homes and buildings, industrial automation, and healthcare.

- In smart homes and buildings, IoT sensing can be used to control heating, lighting, and ventilation systems based on environmental conditions. For example, a temperature sensor can be used to adjust the heating system based on the current temperature, while a light sensor can be used to adjust the lighting system based on the amount of natural light.

- In healthcare, IoT sensing can be used to monitor patients and improve patient outcomes. For example, a wearable sensor can be used to monitor a patient’s heart rate and transmit that data to a cloud platform for analysis. The cloud platform can then alert healthcare professionals if the patient’s heart rate exceeds a certain threshold.
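The two examples above boil down to simple decision rules running on sensor data. The sketch below shows one way each might be written; the setpoint, the 0.5 °C hysteresis band, the heart-rate threshold, and the "three consecutive readings" rule are all hypothetical values chosen for illustration (and are not clinical guidance). The hysteresis band and the consecutive-reading check are added design choices that keep a heater from toggling rapidly and keep a single noisy sample from triggering an alert.

```python
def heating_should_run(temp_c: float, setpoint_c: float, currently_on: bool, band_c: float = 0.5) -> bool:
    """On/off thermostat with a hysteresis band around the setpoint."""
    if temp_c < setpoint_c - band_c:
        return True   # clearly too cold: turn heating on
    if temp_c > setpoint_c + band_c:
        return False  # clearly warm enough: turn heating off
    return currently_on  # inside the band: keep the current state


def heart_rate_alert(bpm_readings: list[int], threshold_bpm: int, min_consecutive: int = 3) -> bool:
    """Return True once the threshold is exceeded for min_consecutive readings in a row."""
    run = 0
    for bpm in bpm_readings:
        run = run + 1 if bpm > threshold_bpm else 0
        if run >= min_consecutive:
            return True
    return False


print(heating_should_run(19.0, setpoint_c=21.0, currently_on=False))  # True
print(heart_rate_alert([125, 128, 132, 90], threshold_bpm=120))       # True
```

In a real deployment these rules would likely run on the cloud platform (or on the gateway), and the alert would be routed to healthcare professionals rather than printed.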

IoT Sensing and Predictive Maintenance

The Internet of Things (IoT) has revolutionized predictive maintenance by enabling real-time monitoring and analysis of equipment and systems. By deploying IoT sensors, organizations can collect data on various factors, including temperature, vibration, energy consumption, and more. This data is analyzed using machine learning algorithms to identify patterns and anomalies that can predict equipment failure.
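As a simple stand-in for the machine learning step, the sketch below flags anomalies in a stream of sensor readings (for example, vibration amplitude) with a rolling z-score: each new value is compared to the mean and standard deviation of the preceding window. This is a basic statistical technique, not a trained model, and the window size and 3-sigma threshold are hypothetical defaults.

```python
from collections import deque
from statistics import mean, pstdev


def rolling_zscore_anomalies(values: list[float], window: int = 20, z_threshold: float = 3.0) -> list[int]:
    """Return indices whose value is more than z_threshold std devs from the preceding window."""
    history = deque(maxlen=window)  # keeps only the most recent `window` readings
    anomalies = []
    for i, v in enumerate(values):
        if len(history) == window:
            mu = mean(history)
            sigma = pstdev(history)
            # Skip flat windows (sigma == 0) to avoid dividing by zero.
            if sigma > 0 and abs(v - mu) / sigma > z_threshold:
                anomalies.append(i)
        history.append(v)
    return anomalies


vibration = [10.0, 10.2] * 10 + [15.0, 10.0]  # steady signal, then one spike
print(rolling_zscore_anomalies(vibration, window=20))  # [20]
```

Flagged indices would then feed the maintenance workflow; a production system would typically combine several signals and use a model trained on historical failures.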

Predictive maintenance in IoT sensing has numerous benefits, including:

- Reduced Downtime: By predicting equipment failures before they occur, maintenance can be scheduled during planned downtime, reducing the impact on operations.

- Lower Maintenance Costs: Predictive maintenance allows organizations to repair or replace equipment before it fails, reducing the need for emergency repairs and lowering overall maintenance costs.

- Increased Efficiency: By monitoring equipment in real-time, organizations can identify inefficiencies and optimize operations to reduce energy consumption and increase efficiency.

- Improved Safety: Predictive maintenance can identify potential safety issues before they become a hazard, reducing the risk of accidents and injuries.

For example, consider a fleet of trucks used for transporting goods. Each truck is equipped with IoT sensors that collect data on factors such as speed, fuel consumption, and engine performance. This data is analysed using predictive maintenance algorithms to identify when maintenance is required. If, for instance, the algorithm detects a decrease in fuel efficiency, it may predict that the engine needs to be serviced. The maintenance team can then schedule a service appointment before the engine fails, minimizing the risk of costly breakdowns and repairs.
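The fuel-efficiency check in the truck example can be sketched as a simple rule: compare recent efficiency against a baseline and flag a service when the drop exceeds a tolerance. The function name, the window sizes, and the 10% tolerance below are hypothetical, and a real predictive maintenance system would likely also account for load, route, and weather rather than rely on one rule.

```python
from statistics import mean


def needs_engine_service(km_per_litre: list[float], baseline_window: int = 10,
                         recent_window: int = 5, max_drop: float = 0.10) -> bool:
    """Flag a service when recent average efficiency falls more than max_drop below baseline."""
    if len(km_per_litre) < baseline_window + recent_window:
        return False  # not enough history to compare
    # Baseline: the earliest readings (e.g. trips just after the last service).
    baseline = mean(km_per_litre[:baseline_window])
    recent = mean(km_per_litre[-recent_window:])
    drop = (baseline - recent) / baseline  # fractional loss in efficiency
    return drop > max_drop


history = [3.0] * 10 + [2.6] * 5  # km per litre per trip
print(needs_engine_service(history))  # True (about a 13% drop)
```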

Condition Monitoring and Prognostics Algorithms

Condition monitoring and prognostics are used in a variety of industries to evaluate the efficiency and state of machinery and systems. Condition monitoring is the process of tracking the health of machinery or other equipment to spot any changes that could indicate a potential problem. Prognostics, on the other hand, is the process of estimating the future health of equipment or a system using the data gathered during condition monitoring.

Algorithms for condition monitoring and prognostics are generally required to maintain the effectiveness and functionality of equipment and systems. By utilising these algorithms, organisations can reduce the risk of equipment malfunction or damage, reduce downtime, and boost productivity.


Conclusion

To sum up, IoT sensing is a rapidly expanding field that uses sensors and other smart devices to gather and transmit information about the physical world to the internet or other computing systems. Organisations and individuals can make better decisions and achieve better results by using the data collected by these sensors to gain insights into a variety of processes and environments.

Challenges Faced by C-Level Executives
